Exploring Tensors: The Heart of TensorFlow Computations

TensorFlow is an open-source library that lets you build and train machine learning models yourself. The name 'TensorFlow' comes from its core abstraction: every computation operates on tensors.

What is a Tensor?

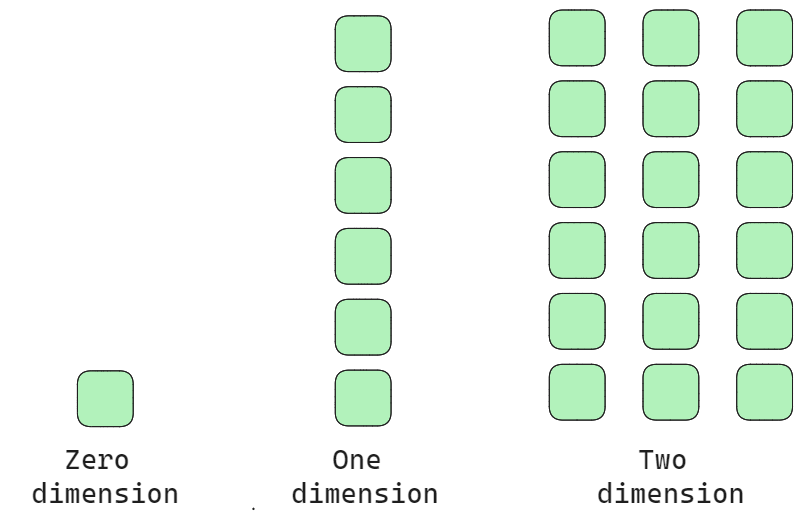

Tensors are the fundamental computational units in TensorFlow. Simply put, a tensor is an array of numbers that can have zero, one, or more dimensions. Let's break it down further.

Structure of a Tensor:

The structure of a tensor is defined by two properties:

Rank: The number of dimensions (axes) of the array that represents the tensor. For instance:

Zero dimensions: a single number (a scalar).

One dimension: a 1D array.

Two dimensions: a 2D array.

Shape: The number of elements along each dimension. For example, a two-dimensional array with a shape of (6, 3) has 6 elements along the first dimension and 3 along the second, i.e. 6 rows and 3 columns.

These properties are crucial for analyzing and understanding tensors.
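To make rank and shape concrete, here is a minimal sketch that inspects both on a few small tensors. It assumes TensorFlow 2.x with eager execution; the variable names (`scalar`, `vector`, `matrix`) are only illustrative.

```python
import tensorflow as tf

# Illustrative tensors of rank 0, 1 and 2.
scalar = tf.constant(7.0)               # a single number
vector = tf.constant([1.0, 2.0, 3.0])   # a 1D array
matrix = tf.zeros([6, 3])               # a 2D array with shape (6, 3)

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    # tf.rank returns a scalar tensor holding the number of dimensions;
    # t.shape is the static shape, converted here to a plain Python list.
    print(name, "rank:", int(tf.rank(t)), "shape:", t.shape.as_list())
```

The rank is always the length of the shape, so a shape of (6, 3) implies rank 2.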

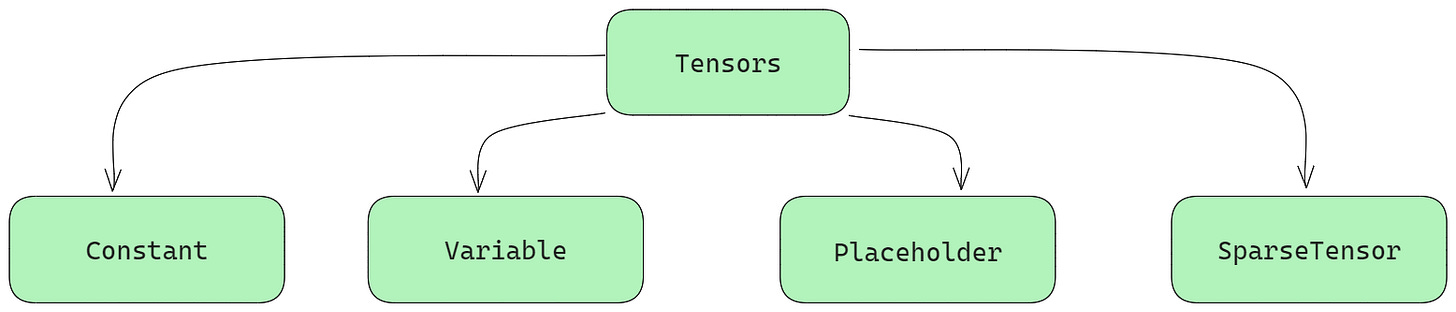

Types of tensors:

Every machine learning engineer and data scientist should be familiar with the different types of tensors, which differ in how they hold and receive their data.

Constant tensor: A tensor whose values remain unchanged throughout training, i.e. it is immutable after creation.

c = tf.constant([1.0, 2.0, 3.0], dtype=tf.float32)

Variable tensor: A variable tensor holds values that can be changed while the program runs. Variables are typically used for the weights of a neural network, which are updated during training.

v = tf.Variable([1.0, 2.0, 3.0], dtype=tf.float32)
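The difference between the two can be sketched as follows, assuming TensorFlow 2.x with eager execution. The names `c_plus_one` and `constant_is_assignable` are introduced here only for illustration.

```python
import tensorflow as tf

c = tf.constant([1.0, 2.0, 3.0], dtype=tf.float32)
v = tf.Variable([1.0, 2.0, 3.0], dtype=tf.float32)

# A Variable can be updated in place.
v.assign([4.0, 5.0, 6.0])
v.assign_add([1.0, 1.0, 1.0])  # v now holds [5.0, 6.0, 7.0]

# A constant cannot be updated in place: an operation on it
# builds a new tensor and leaves the original untouched.
c_plus_one = c + 1.0

# Constants expose no assign method at all.
constant_is_assignable = hasattr(c, "assign")

print(v.numpy(), c.numpy(), c_plus_one.numpy(), constant_is_assignable)
```

This in-place update is what an optimizer relies on when it adjusts weights during training.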

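Placeholder tensor: A tensor that is assigned its data at a later time, i.e. it lets us define operations and build a computation graph without feeding in any data up front. Its values must be fed when the session runs. Placeholders belong to the TensorFlow 1.x graph API, which is why the example uses tf.compat.v1; they cannot be created under TensorFlow 2.x eager execution.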

p = tf.compat.v1.placeholder(dtype=tf.float32, shape=[None, 3])
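As a rough sketch of how a placeholder is fed, the snippet below builds a small graph and runs it in a session. It assumes TensorFlow 2.x with the `tf.compat.v1` API available; the explicit `tf.Graph()` is used so the placeholder is created in graph mode, and `row_sums` is a made-up operation for illustration.

```python
import tensorflow as tf

# Build the computation inside an explicit graph; no data is supplied yet.
graph = tf.Graph()
with graph.as_default():
    p = tf.compat.v1.placeholder(dtype=tf.float32, shape=[None, 3])
    row_sums = tf.reduce_sum(p, axis=1)  # illustrative operation on the placeholder

# Feed actual values only when the session runs.
with tf.compat.v1.Session(graph=graph) as sess:
    result = sess.run(row_sums, feed_dict={p: [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]})

print(result)
```

The `None` in the shape means the first dimension is left open, so the same graph accepts any number of rows as long as each has 3 values.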

SparseTensor : A SparseTensor is a specialized data structure in TensorFlow used to efficiently represent and manipulate tensors with a significant number of zero values. Unlike dense tensors, which store every element explicitly, SparseTensor focuses on storing only the non-zero elements and their positions, making it more memory-efficient and faster for certain operations.

    # Define the indices of the non-zero values
    indices = [[0, 0], [1, 2], [2, 3]]

    # Define the corresponding non-zero values
    values = [1, 2, 3]

    # Define the shape of the dense tensor
    dense_shape = [3, 4]

    # Create the SparseTensor
    sparse_tensor = tf.SparseTensor(indices=indices, values=values, dense_shape=dense_shape)
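To see what this SparseTensor represents, it can be expanded back into an ordinary dense tensor. The sketch below assumes TensorFlow 2.x with eager execution and uses `tf.sparse.to_dense`; the name `dense` is introduced only for illustration.

```python
import tensorflow as tf

# Same sparse tensor as above: three non-zero values in a 3x4 grid.
sparse_tensor = tf.SparseTensor(
    indices=[[0, 0], [1, 2], [2, 3]],
    values=[1, 2, 3],
    dense_shape=[3, 4],
)

# Expand to a dense tensor; every position not listed in indices becomes 0.
dense = tf.sparse.to_dense(sparse_tensor)
print(dense.numpy())
# [[1 0 0 0]
#  [0 0 2 0]
#  [0 0 0 3]]
```

Only 3 of the 12 positions are stored explicitly, which is where the memory saving comes from.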

Conclusion:

That's a wrap on tensors, their structures, and their types. If you're enjoying this deep dive into machine learning and want to hear more, subscribe to our newsletter for the latest updates!

Thanks for reading Coding and Circuits! Subscribe for free to receive new posts and support our work.